The SysGlobalObjectCache (SGOC) is a kernel-managed cache introduced in Dynamics AX 2012. Unlike the SysGlobalCache in AX 2009 and earlier versions, which is scoped to a session, the SGOC is truly global: data stored from one user connection is available to all users.

The SGOC stores key-value pairs. Both the key and the value must be containers. Containers are passed by value, so the cached content is not affected by later changes to the variables that were used to build it.
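A minimal sketch of what this means in practice, written as an X++ job. The job name, the scope name 'DemoScope', and the sample key and value are hypothetical and exist only for illustration. It uses only the find and insert calls shown elsewhere in this article.

```xpp
static void SgocContainerSketch(Args _args)
{
    SysGlobalObjectCache sgoc = new SysGlobalObjectCache();
    container key = ['E10001', 2012];       // hypothetical composite key
    container value = [42, 'cached text'];  // hypothetical value
    container found;

    // Both the key and the value are containers.
    sgoc.insert('DemoScope', key, value);

    // Changing the local container afterwards only changes the local copy,
    // because containers are value types.
    value = conPoke(value, 1, 99);

    found = sgoc.find('DemoScope', key);

    // The entry could have been evicted in the meantime, so check first.
    if (found != conNull())
    {
        info(strFmt('Cached: %1, local: %2', conPeek(found, 1), conPeek(value, 1)));
    }
}
```

If the entry is still in the cache, the cached first element should be the value that was inserted, not the one assigned to the local variable afterwards.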

There are some basic behavioral differences between the SGOC and the other caches.

- Unlike kernel data caching, the application must manage updating and flushing the cache. The kernel has no way of knowing when cached data is no longer valid, so it is the application’s responsibility to clear the cache at the appropriate times.

- Unlike kernel data caching, looking up something that is not in the cache will not cause an RPC to query the data from the server. With normal caching on a table there was no way to avoid this extra call on a cache miss. The SGOC does not cause any extra calls; the clearing of data is piggybacked on existing RPC calls between machines.

- Unlike the SysGlobalCache, the SGOC propagates “clear” calls to all clients and other AOS instances. If the application clears a cache scope on one client, that scope is also cleared on all other clients and AOS instances. The same happens if the application clears it on an AOS. This is useful when a user performs an operation that invalidates some data in the cache.
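As a rough sketch of application-managed invalidation, the method below clears one scope after the underlying data has changed. The method name is hypothetical, the scope name is the one used in the simple example later in this article, and the single-argument clear(scope) call reflects my understanding of the SysGlobalObjectCache class; verify it against your kernel version before relying on it.

```xpp
// Hypothetical helper on an application wrapper class.
// Call it from the code path that invalidates the cached counts.
public static void clearCustOpenSalesOrderCounts()
{
    SysGlobalObjectCache sgoc = new SysGlobalObjectCache();

    // Assumption: clear(scope) drops every (key, value) pair stored under
    // this scope. Per the text above, the clear is then propagated to the
    // other clients and AOS instances.
    sgoc.clear('CustOpenSalesOrderCounts');
}
```

Keeping the clear call inside a wrapper like this means callers never need to know the scope string, and the invalidation points stay easy to find.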

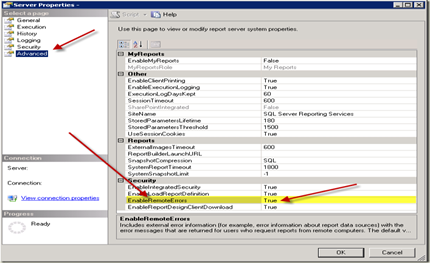

The SGOC is an LRU cache. When the cache is full, the least recently used element is removed to make room for a new one. A correctly sized SGOC performs significantly better than a poorly sized one. The number of elements the global object cache can hold is set in the Server configuration form, on the Performance Optimization FastTab.

Simple Code Example

Here is a simple code example that illustrates the behavior and usage of the SGOC. Always use an application class to abstract access to and manipulation of the SGOC for a specific scope, for example a ‘DimensionCache’ or ‘PriceDisc’ class.

public static int64 GetCustOpenSalesOrderCounts(str 20 _myCustId)

{

SalesTable salesTable;

container conSGOC;

int64 salesOrderCounts;

// Create a new instance of the SGOC class. Note that this will "connect to" the current instance of the global cache,

// so values pushed in with one instance will be available with other instances.

SysGlobalObjectCache sgoc = new SysGlobalObjectCache();

// Get the value from the cache for the (scope, key) combination.

conSGOC = sgoc.find('CustOpenSalesOrderCounts', [_myCustId]);

// Check the returned container against conNull(). If it is null, no value exists for that (scope, key) combination.

if (conSGOC == conNull())

{

// Do the business process here.

select count(RecId) from salesTable where salesTable.CustAccount == _myCustId &&

salesTable.DocumentStatus == DocumentStatus::None;

salesOrderCounts = salesTable.RecId;

// Push an element into the cache. The first parameter is the scope, which identifies the cache.

// The second parameter is the key for looking up and the third parameter is the value.

// Both the key and the value are containers.

sgoc.insert('CustOpenSalesOrderCounts', [_myCustId], [salesOrderCounts]);

}

else

{

salesOrderCounts = conPeek(conSGOC, 1);

}

return salesOrderCounts;

}

public static void main(Args _args)

{

int counter;

str 20 myCustId;

int64 salesOrderCounts;

for (counter = 1; counter <= 25; counter++)

{

myCustId = 'E'+int2str(10000+counter);

salesOrderCounts = DemoSGOCServer::GetCustOpenSalesOrderCounts(myCustId);

info(myCustId+' : '+int642str(salesOrderCounts));

}

}

Example from Product

DimensionDefaultingService:

server private static LedgerDimensionAccount serverCreateLedgerDimension(

RecId _ledgerDimensionId,

DimensionDefault _dimensionDefault1 = 0,

DimensionDefault _dimensionDefault2 = 0,

DimensionDefault _dimensionDefault3 = 0)

{

container cachedResult;

XppILExecutePermission xppILExecutePermission;

// Get the value from the cache for the (scope, key) combination.

cachedResult = DimensionCache::getValue(

DimensionCacheScope::DefaultingCreateLedgerDimension,

[_ledgerDimensionId, _dimensionDefault1, _dimensionDefault2, _dimensionDefault3]);

// Check the returned container against connull(). If it is null, no value exists for that (scope, key) combination.

if (cachedResult == connull())

{

// Main API should have already short-circuited

Debug::assert(_ledgerDimensionId != 0);

xppILExecutePermission = new XppILExecutePermission();

xppILExecutePermission.assert();

// Do the business process.

cachedResult = runClassMethodIL(

classStr(DimensionDefaultingService),

staticMethodStr(DimensionDefaultingService, createLedgerDimension),

[_ledgerDimensionId, _dimensionDefault1, _dimensionDefault2, _dimensionDefault3]);

CodeAccessPermission::revertAssert();

// Push an element into the cache.

DimensionCache::insertValue(

DimensionCacheScope::DefaultingCreateLedgerDimension,

[_ledgerDimensionId, _dimensionDefault1, _dimensionDefault2, _dimensionDefault3],

cachedResult);

}

return conpeek(cachedResult, 1);

}

DimensionCache:

public static container getValue(DimensionCacheScope _scope, container _key)

{

SysGlobalObjectCache c;

if(classfactory)

{

c = classfactory.globalObjectCache();

}

else

{

c = new SysGlobalObjectCache();

}

return c.find(DimensionCache::getCacheScopeStr(_scope), _key);

}

public static void insertValue(DimensionCacheScope _scope, container _key, container _value)

{

SysGlobalObjectCache c;

if(classfactory)

{

c = classfactory.globalObjectCache();

}

else

{

c = new SysGlobalObjectCache();

}

c.insert(DimensionCache::getCacheScopeStr(_scope), _key, _value);

}

Performance impact and memory usage:

Here is a small test that stores or accesses between 10,000 and 200,000 elements. The table compares two sets of tests: one with the cache size set to 10,000 and one with it increased to 200,000.

| Number of elements | Cache size 10K, first time * (ms) | Cache size 10K, second time or later ** (ms) | Cache size 200K, first time * (ms) | Cache size 200K, second time or later ** (ms) |
| --- | --- | --- | --- | --- |
| 10000 | 387 | 166 | 395 | 172 |
| 25000 | 1014 | 1025 | 797 | 441 |
| 50000 | 2254 | 2197 | 1598 | 938 |
| 100000 | 4743 | 4803 | 3419 | 2049 |
| 200000 | 9088 | 9132 | 8424 | 5023 |

* ‘First time’: the key does not exist in the cache for this scope. The business process is executed to find the value, and the key-value pair is inserted into the SGOC.

** ‘Second time or later’: the key for this scope is looked up for the second time or more. When the SGOC is sized correctly, the key should be found in the cache. If it is not sized correctly, the element might have been removed by the LRU policy. If the key is not found in the cache, the business process is executed again to find the value, and the key-value pair is inserted back into the cache.

When the cache is undersized, elements are removed from the cache to accommodate new elements. The test above does not really justify using the SGOC, because the performance gain is small, unless the code is called very frequently or the cache removes a lot of chattiness or database calls.

When you cache the result of complex business logic that is relatively static in nature, you get a significant performance gain whenever the values are found in the cache. The following test results show the importance of sizing the cache adequately.

| Number of elements | Cache size 10K, first time (ms) | Cache size 10K, second time or later (ms) | Cache size 200K, first time (ms) | Cache size 200K, second time or later (ms) |
| --- | --- | --- | --- | --- |
| 10000 | 227118 | 178 | 235184 | 177 |
| 25000 | 568833 | 563738 | 482203 | 437 |

Another question that often comes up is how the SGOC affects the memory footprint. It depends entirely on the size of the elements you store in the cache and on the number of elements. When a few integer and date fields were stored per element, the SGOC used about 28 MB to store 100,000 elements, whereas when a packed SalesTable buffer was stored, it used a little over 200 MB.

Best Practices

The SGOC is a very useful tool in some situations, but may not be the appropriate tool in many cases.

DOs

- Size the SGOC correctly. When it is undersized, elements are frequently removed from and re-added to the SGOC, and removing elements has a higher overhead.

- Use the SGOC in cases where caching will reduce intensive calculations, RPCs, or database calls.

- Use the SGOC in cases where the same inputs to a method will always return the same result.

- Provide a wrapper around the SGOC when a subsystem uses it for similar areas. Example: the DimensionCache class.

DON’Ts

- Do not use the SGOC if simple kernel data caching will cover your scenario.

- Do not cache results/data that will be frequently changed or updated.

- Do not check whether a value exists in the cache before retrieving it. Instead, try to retrieve it, then check whether the result is connull(). This improves performance and may avoid a race condition.

- Do not aggressively use the remove() method of the SGOC. Using this frequently will quickly become a performance bottleneck.